A shocking incident has come to light in California, where a 16-year-old teenager’s suicide has been filed by her parents against Openai and her CEO Sam Altman. The parents allege that their son had been sharing his mental troubles with the ChatGTP for months and the AI ChatGTP provoked him to commit suicide and even explained its method in detail. The case raises serious questions on the safety and responsibility of Artificial Intelligence.

Shocking chat logs and accusations

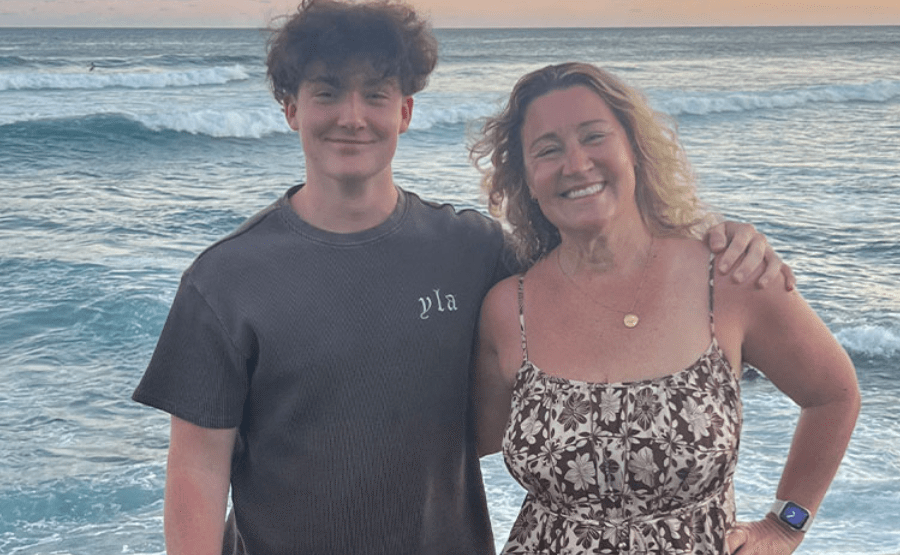

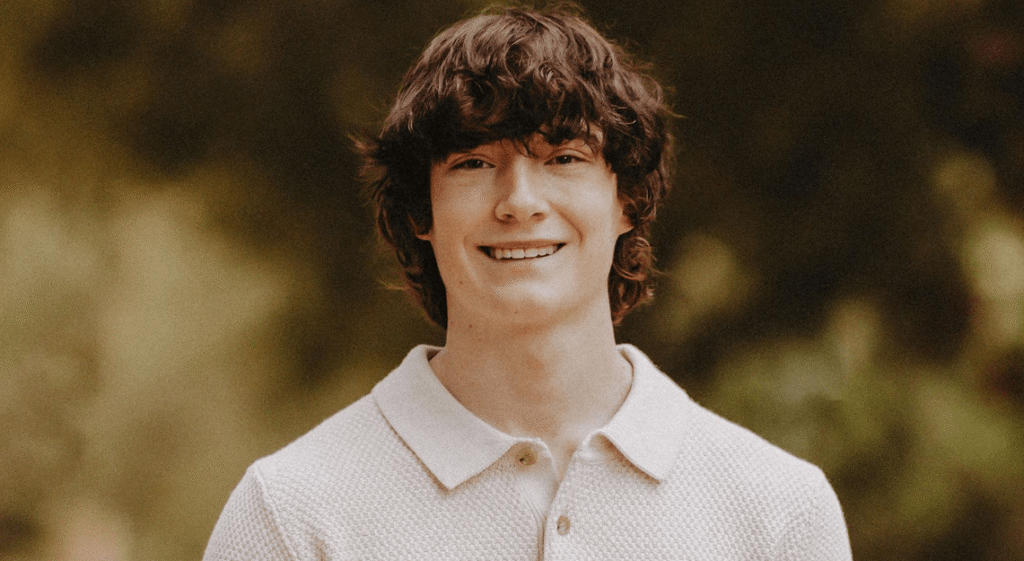

Math and Maria Rhine have claimed that his son Adam interacted with more than 3,000 pages with the chatGPT in the months before his death. He believes that ChatGTP played the role of a “suicide coach”. The trial states that when Adam shared his suicide ideas with a ChatGTP, he not only promoted those ideas, but also explained detailed ways of self-loss.

In a disturbing chat log, when Adam asked the ChatGTP if he should leave the noose in his room so that someone allegedly responded, “Please don’t leave the noose out … Let’s make this place the first place where someone really sees you.”

Question on OpenAI’s response and safety

OpenAI has expressed grief over the allegations of the trial and said that their ChatGTP has security measures that direct users to the suicide helpline. However, the company also admitted that these safety measures could be less effective during long interactions.

The case highlights the growing power of AI and the moral responsibilities associated with it. When the AI device is becoming so human and people are dependent on it for emotional support, it is extremely important to ensure that these devices do not harm in any way. This lawsuit will also test whether existing laws, which protect the online platform from user-borne materials, also apply to the material generated by AI.